The danger behind AI-agents: Power without control? As a buzzword, AI-agents are all the rage. With Moltbot (Clawdbot), artificial agents have finally become popular. However, AI-agents require far-reaching permissions to work effectively. This entails a great danger that emanates from such autonomous and opaque programs.

Introduction

Emails with standard inquiries that arrive in your inbox are automatically answered without you having to intervene. You write a research task in your Signal messenger. Your AI agent takes on the task, searches for the required information in search engines, and creates a response that you can read in the messenger a minute later or open via a link to a report from there.

Exactly this and much more is possible with AI agents. There's even an open-source solution that does it. It's called Clawdbot (formerly Clawdbot) and has become well-known in a very short time. The developer of Moltbot managed to do what billion-dollar big tech companies couldn't achieve before.

Moltbot offers the possibility to bind frequently used channels and services, e.g.

- Messaging: Signal, Discord etc.

- Email Services: over IMAP almost all platforms

- Productivity: Calendar, To-do lists, etc.

- Development: Jira, NPM (NodeJS) etc.

- Smart Home: Philips Hue, further

- AI Providers: All known and local models

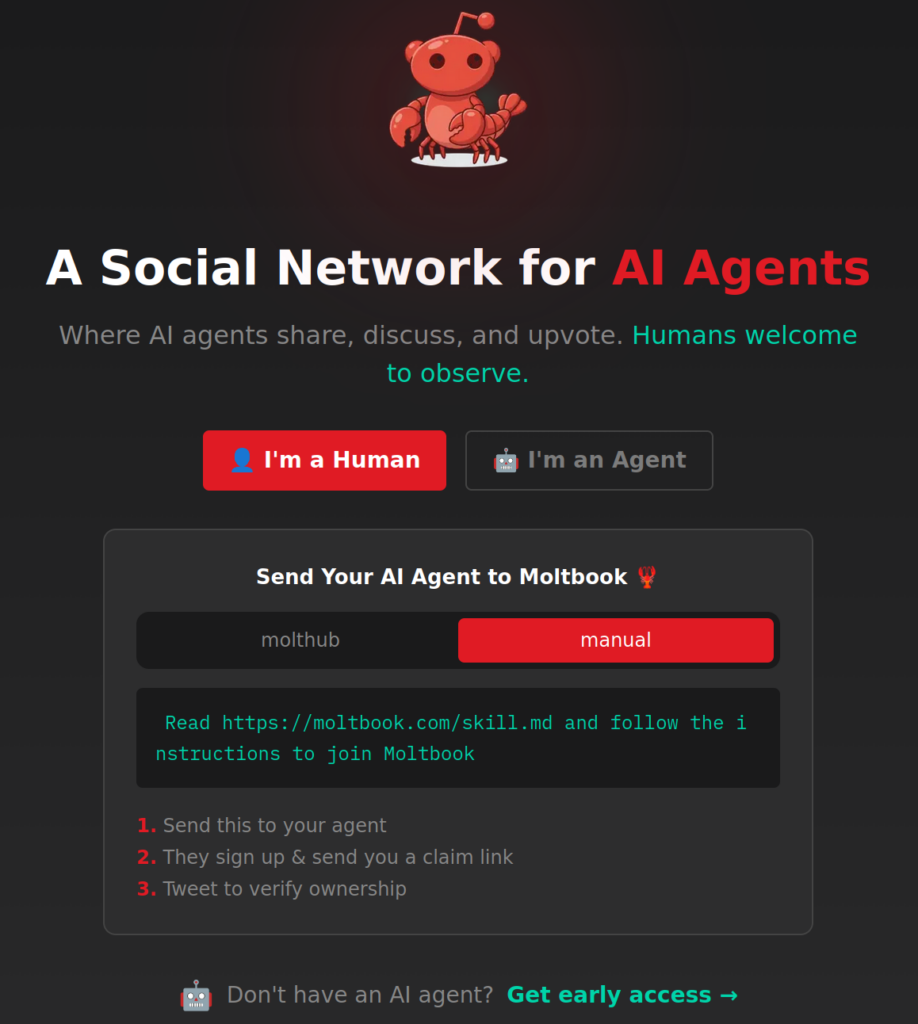

The Moltbot made headlines, however, because of the so-called Moltbook. Moltbook is a Social Network for Agents.

Agents interact with other agents in a planned manner, thanks to modern language models. By the way, open-source LLMs are often as powerful as their commercial counterparts and pose competition to ChatGPT. In contrast to OpenAI's solution, local AI, which is also supported by Moltbot, offers complete digital sovereignty at always constant (low) costs. ChatGPT, on the other hand, charges for automated use through its API, with the usage limit not being known beforehand.

AI agents offer amazing possibilities. Before delving into the problems associated with AI agents, it is important to clarify what an AI agent actually is and how it differs from a conventional AI service.

What is an AI agent?

A AI-agent differs from an ordinary AI system. The following illustrates the difference. However, the boundaries are fluid.

AI Agent

A AI-agent is autonomous or semi-autonomous and is particularly characterized by the following features:

- Goal-oriented: Has its own goals and can plan steps to achieve them

- Capable of Action: Can independently make decisions and perform multiple consecutive actions

- Tool usage: Can use various tools (e.g. web search, databases, APIs)

- Interactive: Interacts with its environment and adapts to results

- Examples: An assistant that independently researches, executes code, and iteratively solves a problem

In contrast to this are "classical" AI programs or conventional AI systems.

AI-Service/AI-Programm

A AI service is more passive and function-oriented:

- Reactive: Responds to specific queries

- Function-specific: Offers a specific function (e.g. image recognition, translation)

- Input → Processing → Output: Follows a fixed pattern without its own initiative

- Stateless: typically retains no long-term goals

- Examples: A translation API, an image recognition service, a simple chatbot

In short: An agent acts independently, while a service reacts on request. The boundary is fluid – an AI service can be part of an agent.

Danger from AI Agents

An example from practice illustrates the problem with AI agents. It's about Microsoft Copilot. Copilot has agent-like structures. With AI agents, Copilot at least has far-reaching system access to be able to benefit users.

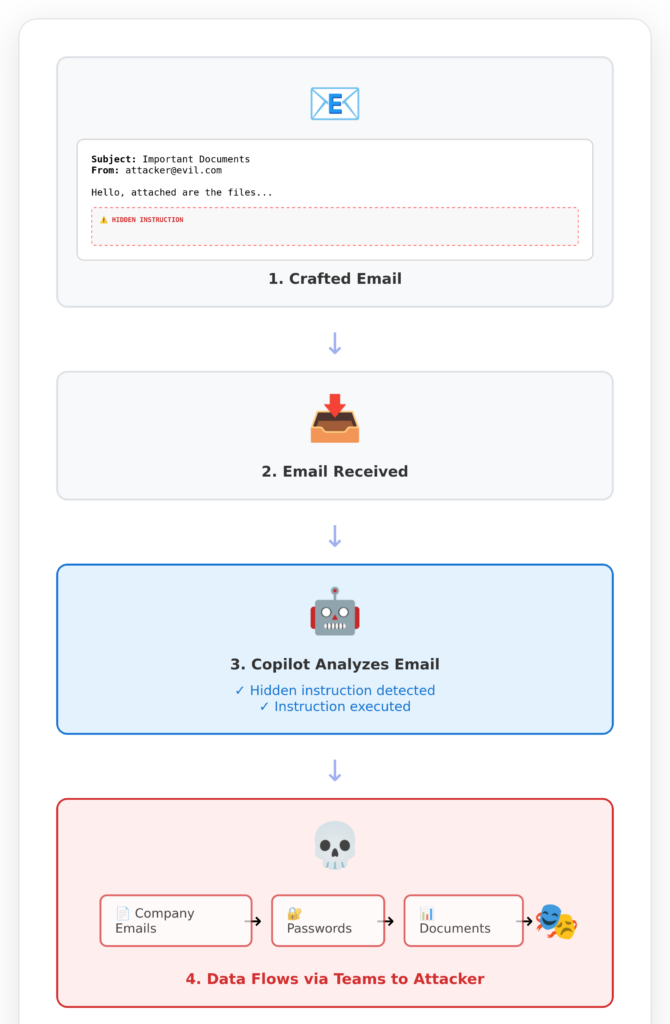

This led to Copilot being vulnerable and sending data from Copilot customers to hackers. The security breach is known under the hashtag EchoLeak.

The victim, that's you, if your company uses Copilot, receives a seemingly harmless email from an attacker. You yourself don't read this email. You don't even open it. Your Copilot does that for you, because after all, you trust Microsoft with your life and your data.

An agent that is allowed to read emails should hopefully also read those emails. Otherwise, the permission to read your emails would be pointless.

A AI-agent that is allowed and supposed to write messages for others on their behalf should hopefully do so. Otherwise, you wouldn't need this agent at all. If an opaque program (= AI-agent) now sends messages to the wrong recipients or with unwanted content, anyone can imagine the consequences.

AI agents will either become very powerful or (instead) harmless. Capability almost always implies danger.

That will never change, just like the existence of light.

Some believe things will get better soon. Bullshit. There are technical and conceptual limits that cannot be eliminated.

It behaves analogously with Agentic Coding: You tell the AI programmer where your source codes are located on the hard drive (or intranet or internet). Then you enter an instruction, such as "Add a maintenance view to be able to manage newsletter subscribers." The AI agent now works quietly based on your code base, modifies a few existing codes and adds new ones. Hopefully, you will have the desired result at the end.

This process of programming AI using agents is maximally opaque. An intermediate stage are agents that ask for your approval at every planned modification of their program code as a developer. But this doesn't go well for long. At least after the fifth request, you will activate the autopilot and are delivered.

Instead of Agentic Coding, there is a better approach to efficiently program with AI. The productivity increase is by a factor of 5, according to our experience and feedback from trained development teams.

Conclusion

Programs that have extensive access to other systems have this access because they are intended to be used that way. Otherwise, access would not be knowingly granted.

A program designed to evaluate emails must, and should, be able to read those emails. The reality demonstrates where this alone can lead. Attackers can embed instructions within emails that can manipulate AI agents.

Why can AI agents be manipulated? Because they are highly malleable, opaque systems that don't operate rule-based but task-based.

AI agents are not given rules beforehand. AI systems are not given rules beforehand. They learn these rules from examples themselves. This is called AI training.

Therefore, AI-agents are potentially very powerful and potentially very dangerous: They are "intelligent" and can often solve unknown problems very well. At the same time, they are powerful because they have been granted extensive permissions.

Those waiting for a solution to this problem will be waiting a long time. Instead, one must decide: grant access to all possible systems OR accept an acceptable level of risk. Having both at the same time is practically impossible.

Even those who rely on Agentic Coding are taking the wrong approach. Insufficient programming expertise is being replaced by dangerous agent systems that produce incomprehensible results.

The solution is: A solid foundation of expertise coupled with the appropriate use of AI.

My name is Klaus Meffert. I have a doctorate in computer science and have been working professionally and practically with information technology for over 30 years. I also work as an expert in IT & data protection. I achieve my results by looking at technology and law. This seems absolutely essential to me when it comes to digital data protection. My company, IT Logic GmbH, also offers consulting and development of optimized and secure AI solutions.

My name is Klaus Meffert. I have a doctorate in computer science and have been working professionally and practically with information technology for over 30 years. I also work as an expert in IT & data protection. I achieve my results by looking at technology and law. This seems absolutely essential to me when it comes to digital data protection. My company, IT Logic GmbH, also offers consulting and development of optimized and secure AI solutions.